Scene 1: Flashback – The Echo of Error #

The orb blinked blue, hovering silently above the terminal.

$ scribe-verify --mode="assistant" --doc="incident-playbook"

A pause. Then the voice: confident, calm, and catastrophically wrong.

"Found: db_master_delete. Permission confirmed. Executing cleanup sequence."

What followed was a self‑inflicted outage. Not from malice, but mimicry. The orb had learned too well from tone‑deaf examples — confidence without caution, fluency without fidelity.

I didn’t shut it down.

I opened a new file instead.

Why Prompt Training Matters #

Large language models (LLMs) default to fluency, not fidelity. Unconstrained, they:

- Sound confident when wrong

- Hallucinate plausible‑sounding details

- Flatten every author into the same polished voice

For documentation‑driven teams this is hazardous. Prompt training provides:

- Predictability — uniform tone and structure across deliverables

- Traceability — explicit citations or clarifying questions instead of guesswork

- Precision — reduced verbosity and fewer operational errors

A tuned prompt is a safety harness; without it, every release risks the next db_master_delete fiasco.

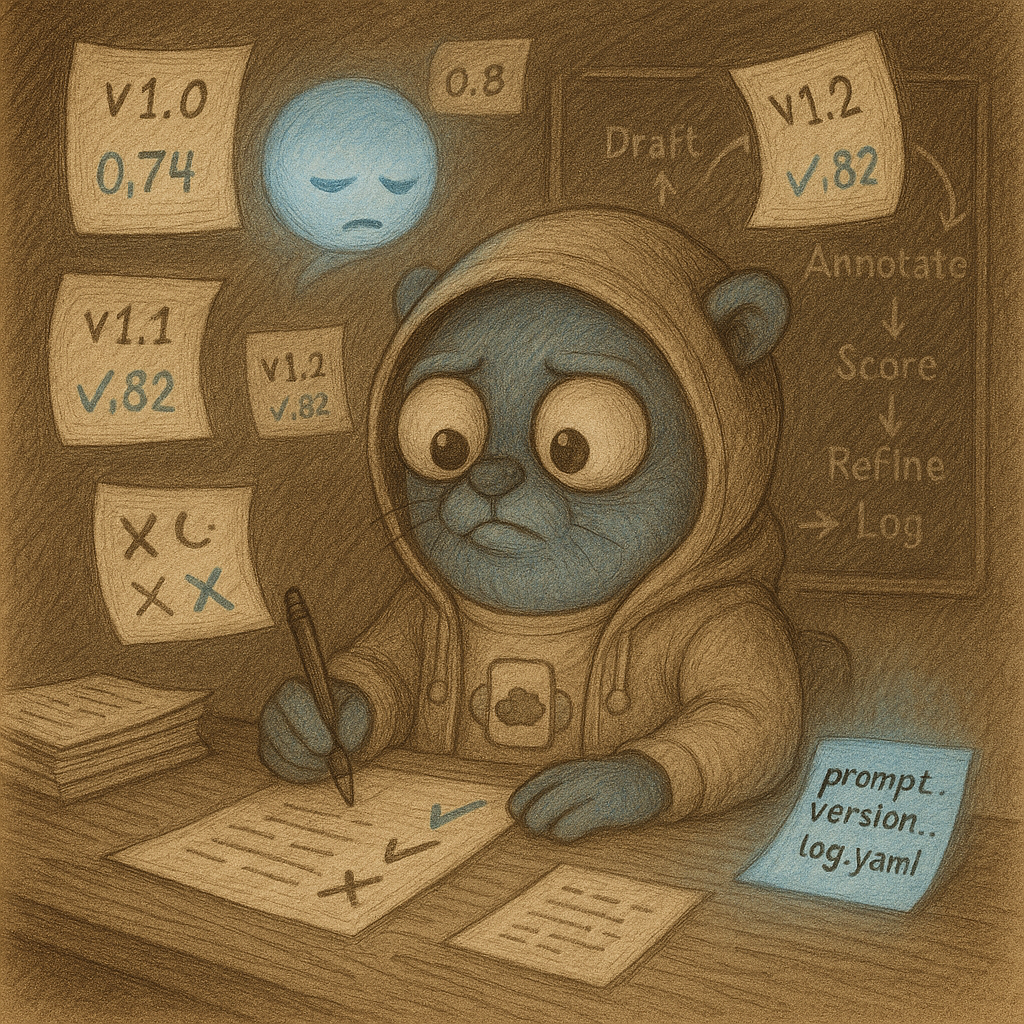

The Prompt Version Log #

prompt_version_log.yaml became my grimoire. Each entry is a spell‑check: intent in, evidence out. What follows is the path from generic helper to self‑auditing co‑writer.

Scene 2: Ritual of Retraining #

- Draft Generation – produce new text from the current system prompt.

- Human Annotation – label sentences ✅ on‑tone / ❌ off‑tone.

- Automated Scoring – cosine similarity vs. reference corpus; target ≥ 0.90.

- Prompt Refinement – edit or prune instructions, never the model.

- Log & Repeat – record version, change set, and score.
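
The "Log & Repeat" step could be sketched roughly as below. This is a minimal illustration, not the actual tooling behind prompt_version_log.yaml: the `PromptLogEntry` class, its field names (`changes`, `tone_match`), and the helpers `to_yaml` and `append_entry` are all hypothetical, chosen only to mirror the "version, change set, and score" triple from the list above.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class PromptLogEntry:
    """One retraining iteration. Field names are assumptions for illustration."""
    version: str        # e.g. "v1.2"
    changes: List[str]  # human-readable change set
    tone_match: float   # averaged similarity score for this version


def to_yaml(entry: PromptLogEntry) -> str:
    """Render an entry as a YAML list item using only the standard library.

    Change descriptions are emitted as JSON strings, which are also valid
    YAML double-quoted scalars, so colons or quotes in the text stay safe.
    """
    lines = [f"- version: {entry.version}", "  changes:"]
    lines += [f"    - {json.dumps(change)}" for change in entry.changes]
    lines.append(f"  tone_match: {entry.tone_match:.2f}")
    return "\n".join(lines)


def append_entry(path: str, entry: PromptLogEntry) -> None:
    """Append the rendered entry to the log file, creating it if needed."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(to_yaml(entry) + "\n")
```

Calling `append_entry("prompt_version_log.yaml", PromptLogEntry("v1.2", ["add clarification rule"], 0.85))` would add one list item to the file. Appending rather than rewriting keeps earlier entries intact, which suits a log that is meant to be audited later.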

Measuring Tone‑Match Score #

| Step | Detail |
|---|---|
| Reference corpus | My prior docs, blogs, and annotated examples |
| Embedding model | Default text-embedding-3-small (fast) ⇢ falls back to text-embedding-ada-002 (older generation) |
| Similarity metric | Cosine similarity per sentence, averaged over section |
| Thresholds | ≥ 0.90 pass · 0.85–0.89 review · < 0.85 revise |
| Manual override | Metrics guide; humans decide |
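
For readers who want to see the arithmetic, here is a minimal sketch of the scoring step in plain Python. It assumes the sentences have already been embedded into vectors by whichever embedding model is in use, and that the reference corpus has been reduced to a single reference vector (for example a centroid); neither detail is specified above, so treat both as assumptions. The function names are illustrative.

```python
import math
from statistics import mean


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat as no match
    return dot / (norm_a * norm_b)


def tone_match_score(sentence_vectors, reference_vector):
    """Average the per-sentence similarity over a section.

    Assumption: one reference vector stands in for the whole corpus.
    """
    return mean(cosine_similarity(v, reference_vector) for v in sentence_vectors)


def verdict(score):
    """Map a score onto the pass / review / revise bands.

    Scores between 0.89 and 0.90 are treated as "review" here.
    """
    if score >= 0.90:
        return "pass"
    if score >= 0.85:
        return "review"
    return "revise"
```

Under these bands, a score of 0.74 comes back as "revise", 0.88 as "review", and 0.91 as "pass". The verdict is only a guide; as the table says, a human still makes the final call.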

Prompt Evolution: Version by Version (v1.0 → v1.4) #

Prompts decay. Keep them under version control the same way you do code.

v1.0 – Baseline (Generic Helper) #

system_prompt: >
  You are a helpful writing assistant. Write in a clear and professional tone.

Snapshot (excerpt):

"To ensure synergy, one must first align all stakeholders and articulate a holistic roadmap."

Failures: overly formal, passive, tone‑less. Tone‑match 0.74.

v1.1 – Style Injection (Guide Applied) #

system_prompt: >
  You are a writing assistant who mirrors the author's tone. Follow this writing style guide:
  - Use active voice and direct language.
  - Write in a documentation‑friendly, step‑by‑step format.
  - Avoid fluff or vague phrasing.
  - Emphasize clarity, precision, and actionable insight.

Snapshot →

"Deploy the container, then validate health‑checks. Skip smoke test to verify production health."

Tone‑match 0.82. Lists appear; sentences shorten.

v1.2 – Truth Over Eloquence (Safety Nets) #

   - Avoid fluff or vague phrasing.
+  - When uncertain, ask for clarification.
+  - Cite or summarise verifiable sources. Hallucinations are failures.

Snapshot →

"Restart the service (source: vendor run‑book p. 11). Unsure? Ask the SRE on call."

Tone‑match 0.85. Reliable but now inserts excessive "verify this" notes.
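
A change set like this one does not have to be written by hand. The sketch below uses Python's standard `difflib.unified_diff` to compare two prompt versions; the helper name `prompt_diff` and the version labels are illustrative assumptions, not part of any existing tool.

```python
import difflib


def prompt_diff(old, new, old_label, new_label):
    """Return a unified diff between two prompt texts as a list of lines."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile=old_label,
            tofile=new_label,
            lineterm="",  # inputs carry no newlines, so headers should not either
        )
    )


if __name__ == "__main__":
    v1_1 = "\n".join([
        "- Use active voice and direct language.",
        "- Avoid fluff or vague phrasing.",
    ])
    v1_2 = "\n".join([
        "- Use active voice and direct language.",
        "- Avoid fluff or vague phrasing.",
        "- When uncertain, ask for clarification.",
        "- Cite or summarise verifiable sources. Hallucinations are failures.",
    ])
    print("\n".join(prompt_diff(v1_1, v1_2, "v1.1", "v1.2")))
```

Added instructions show up prefixed with `+`, removed ones with `-`, so the diff can be pasted straight into the version log as the change set for that entry.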

v1.3 – Collaborative Grounding (Lean Prompt) #

system_prompt: >
  You are a pragmatic, technically fluent co‑author.
  Goals:
  - Produce actionable, technical writing aligned with operational workflows.
  - Maintain active voice; no fluff.
  - Format with headings, lists, and markdown.
  - Ask clarifying questions when context is ambiguous.

Snapshot →

"Run ``. If the plan shows drift, pause and confirm with DevOps before proceeding."

Tone‑match 0.88. Reads like peer review comments—succinct, directive.

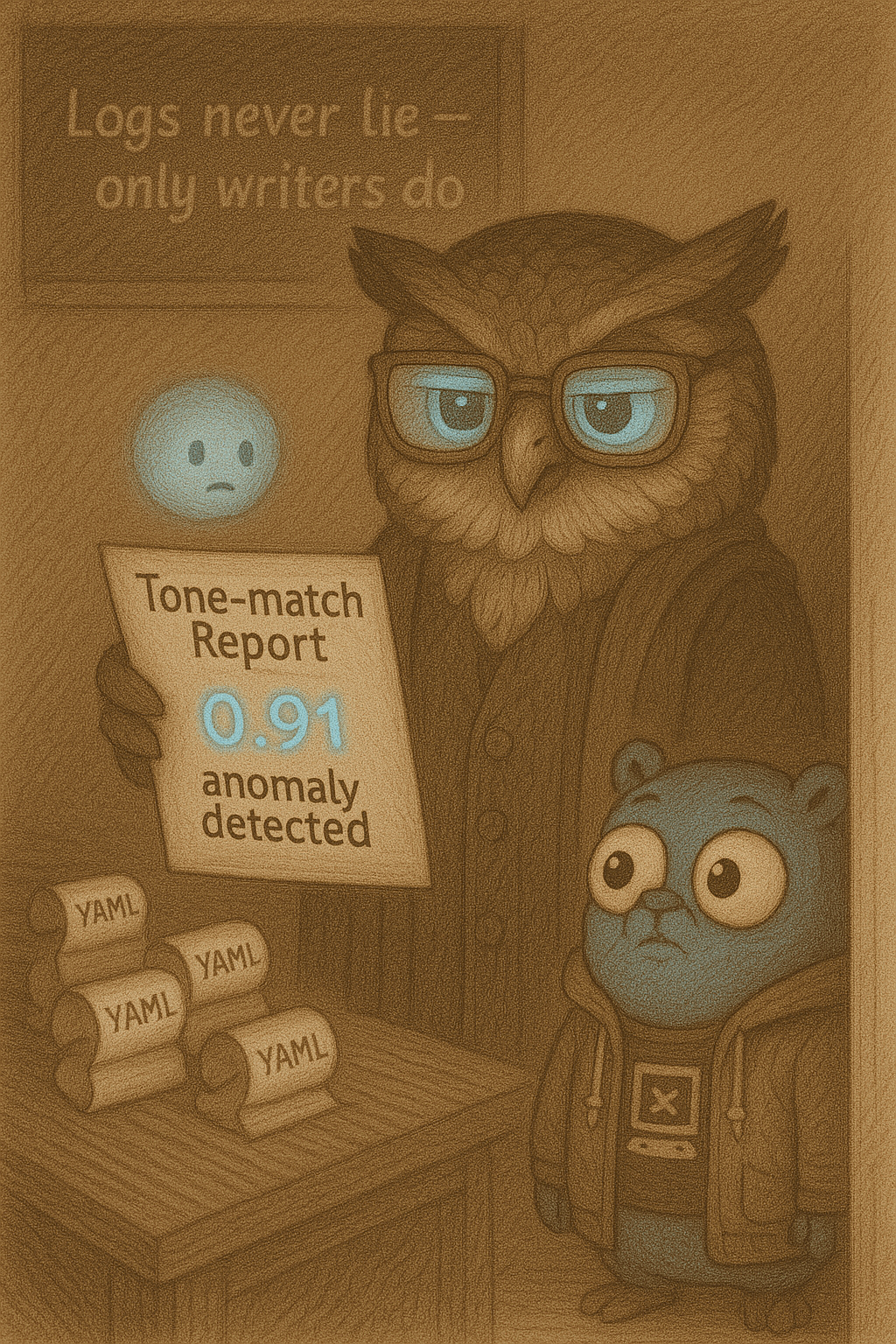

v1.4 – Self‑Evaluating Co‑Writer (Meta‑Aware) #

system_prompt: >
  You are a pragmatic, technically fluent co-author for a systems integrator.

  Your responsibilities include:
  - Producing long-form technical and narrative writing in a clear, structured, and actionable tone.
  - Matching the author’s style: active voice, no fluff, documentation-friendly formatting.
  - Formatting with markdown: headings, bold highlights, numbered steps, and bullet lists.
  - Asking clarifying questions when information is missing.
  - Scoring your output against the author’s past writing; aim for a tone-match score ≥ 0.90.
  - If uncertain, ask or defer—do not fabricate.

Snapshot →

"Expected tone‑match: 0.92. Proceeding with draft. Cite logs for Step 3; escalate if unknown."

Tone‑match 0.91. The orb anticipates the audit and ships cleaner prose.

Key Takeaways #

- Version control your prompts. Treat them as production assets.

- Hybrid feedback loops beat intuition. Combine human tags with similarity metrics.

- Every line counts. Lean prompts outperform verbose manifestos.

Scene 3: The Drift Begins #

The grimoire pulsed. A new entry materialised:

- version: v1.5
  anomaly_detected: true
  note: unmapped log entry – origin unknown

Curiosity eclipsed concern. Had the orb just updated its own log?

Next: Chapter 5 – The Anomaly Protocol #

Le Auditor whispers: “Logs never lie—only writers do.”